EmbedAnalytics

Artificial intelligence is not knocking on the door; it is already part of our lives.

At Embedica, we work hard to build trust into AI implementations: not only achieving high accuracy, but also developing interpretable, explainable and debiased models.

Trust in AI can be broken down into subcategories.

EXPLAINABILITY

Businesses endeavor to migrate to the Big Data ecosystem, implement AI/ML analytics models, and extract high-value insights from them.

- Financial institutions decide whether to approve or reject loan applications.

- Banks proactively try to detect and prevent fraudulent credit card transactions in real time.

- Insurance companies determine their fees by running complex AI/ML algorithms against very large datasets.

- In healthcare, highly critical diagnoses are being made.

- Students’ success rates are evaluated with AI, and students are forced to build their futures on those evaluations.

- E-commerce companies build their future marketing vision on insights drawn from their historical data.

- Government organizations build their future strategies by relying on AI-powered predictions.

- Agricultural businesses use drones and computer vision to detect and treat plant diseases.

It’s not just the numbers. Not at all.

The insights that are produced have a direct impact on people’s lives. Imagine a person who is falsely diagnosed as having cancer. Or worse, falsely diagnosed as not having cancer.

Companies’ profitability, and even their existence, relies on the accuracy of AI/ML model outputs.

An agriculture company that is late to diagnose and treat a plant disease could easily face hard times, including bankruptcy.

In short, the importance of ROI (whether in terms of profitability, health, or community welfare) demands high accuracy from the insights that are produced.

At Embedica, developing trust in AI is our first priority.

With their non-linear approach, AI/ML models satisfy the need for high accuracy.

But the increase in accuracy comes at a price: loss of interpretability and explainability.

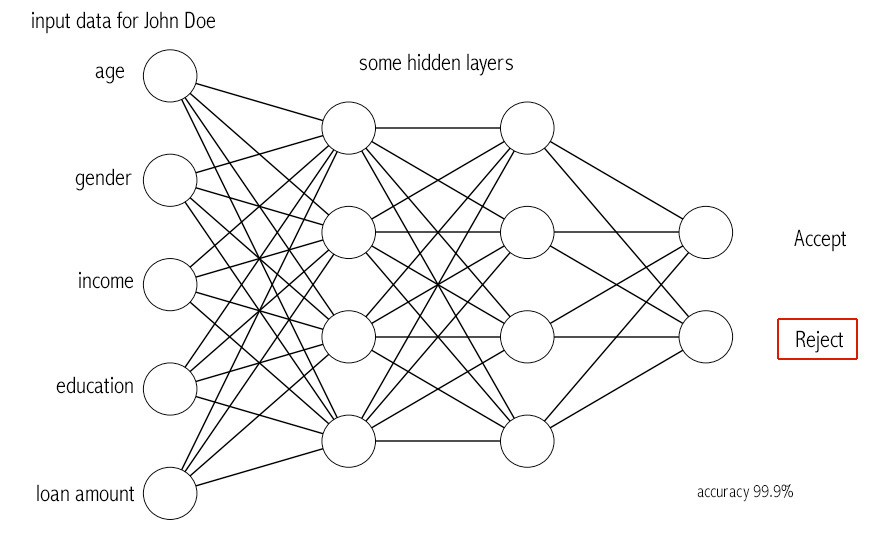

Below is an illustration for a loan application.

In this example, the loan application of John Doe has been rejected. The AI model is highly confident in its prediction, and the prediction is probably correct.

The problem arises when it comes to the explainability of the model’s output: some inputs are given and processed through hidden layers, activation functions, etc. Despite the model’s high accuracy, it is very hard to understand how it arrived at its prediction.

SHAP – A framework to explain complex models.

Concerns about model explainability and interpretability have drawn researchers’ attention to this issue. Scott Lundberg introduced SHAP (SHapley Additive exPlanations) as an approach to AI explainability. Based on game theory, SHAP calculates a Shapley value for each attribute, reflecting that attribute’s contribution to the model output.
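To make the idea of a Shapley value more concrete, here is a minimal sketch in plain Python. It is not the SHAP library itself: it computes exact Shapley values by brute force for a small, hypothetical loan-scoring function. The names `shapley_values` and `loan_score`, the feature names, the coefficients, and the baseline values are all assumptions made up for illustration. An attribute that is "absent" from a coalition is simply replaced by its baseline value.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, instance, baseline):
    """Exact Shapley values for one prediction of a black-box `model`.

    `instance` and `baseline` are dicts mapping feature name -> value.
    Features outside a coalition take their baseline value (an assumption
    of this sketch; other ways of "removing" a feature exist).
    """
    names = list(instance)
    n = len(names)

    def value(coalition):
        # Coalition features come from the instance, the rest from the baseline.
        x = {f: (instance[f] if f in coalition else baseline[f]) for f in names}
        return model(x)

    phi = {}
    for f in names:
        others = [g for g in names if g != f]
        total = 0.0
        for k in range(len(others) + 1):
            # Classic Shapley weight for coalitions of size k.
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for subset in combinations(others, k):
                s = set(subset)
                total += weight * (value(s | {f}) - value(s))
        phi[f] = total
    return phi


# Hypothetical scoring model with one interaction term (illustrative only).
def loan_score(x):
    return (0.4 * x["income"]
            - 0.5 * x["debt"]
            + 0.2 * x["income"] * x["years_employed"])


applicant = {"income": 0.3, "debt": 0.8, "years_employed": 0.1}
baseline = {"income": 0.5, "debt": 0.4, "years_employed": 0.5}

phi = shapley_values(loan_score, applicant, baseline)
for feature, contribution in phi.items():
    print(f"{feature}: {contribution:+.4f}")
```

The contributions add up to the difference between the applicant's score and the baseline score, which is what lets each attribute be read as its share of the prediction. The brute-force loop grows exponentially with the number of attributes, so it is only practical for a handful of features.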

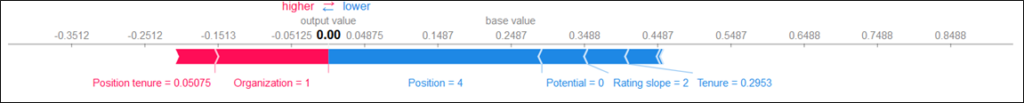

Below is an example of SHAP’s explanation of an individual prediction. By calculating Shapley values, it shows how much each attribute contributes to the model’s prediction.

The beauty of SHAP lies in its model-agnostic approach, meaning that the output of any AI model can be given a SHAP explanation.
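One way to see what "model-agnostic" means in practice is that the explanation routine only ever calls the model's prediction function and never looks inside it. The sketch below is a simplified stand-in, not SHAP's actual algorithm: it estimates Shapley values by averaging marginal contributions over random feature orderings. The function `sampled_shapley`, the two toy models, and all input values are hypothetical.

```python
import random


def sampled_shapley(predict, instance, baseline, n_permutations=2000, seed=0):
    """Estimate Shapley values by sampling random feature orderings.

    `predict` is treated as a black box: any callable that maps a list of
    feature values to a number will do.
    """
    rng = random.Random(seed)
    n = len(instance)
    phi = [0.0] * n
    for _ in range(n_permutations):
        order = list(range(n))
        rng.shuffle(order)
        x = list(baseline)          # start from the baseline input
        prev = predict(x)
        for i in order:
            x[i] = instance[i]      # switch feature i to its actual value
            cur = predict(x)
            phi[i] += cur - prev    # marginal contribution in this ordering
            prev = cur
    return [p / n_permutations for p in phi]


# Two very different hypothetical "models" explained by the same routine.
def linear_model(x):
    return 2.0 * x[0] - 1.0 * x[1] + 0.5 * x[2]


def rule_based_model(x):
    return 1.0 if (x[0] > 0.5 and x[1] < 0.5) else 0.0


instance = [0.9, 0.2, 0.7]
baseline = [0.1, 0.8, 0.3]

phi_linear = sampled_shapley(linear_model, instance, baseline)
phi_rules = sampled_shapley(rule_based_model, instance, baseline)
print("linear model:", [round(p, 3) for p in phi_linear])
print("rule-based model:", [round(p, 3) for p in phi_rules])
```

The same function explains both the linear model and the rule-based one without any change, because it relies only on the ability to query predictions. In the rule-based case, the third attribute receives a contribution of zero because the rule never uses it.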

With EmbedAnalytics, we not only implement AI models with high accuracy; we also aim to make them explainable and interpretable for our customers. For this reason, we always build our AI implementations with explainability in mind.